Abhishek Narayan, CEO of Growing Pro Technologies, an IT industry leader specializing in digital and AI solutions.

In the age of generative AI and ubiquitous data collection, innovation is no longer just about speed, scale or disruption. It’s about trust. We are sprinting into a future where deepfakes, algorithmic surveillance and synthetic media can outpace regulation—and even human comprehension. The question now is not simply how to innovate but how to innovate ethically in a landscape where trust, truth and transparency are under constant scrutiny.

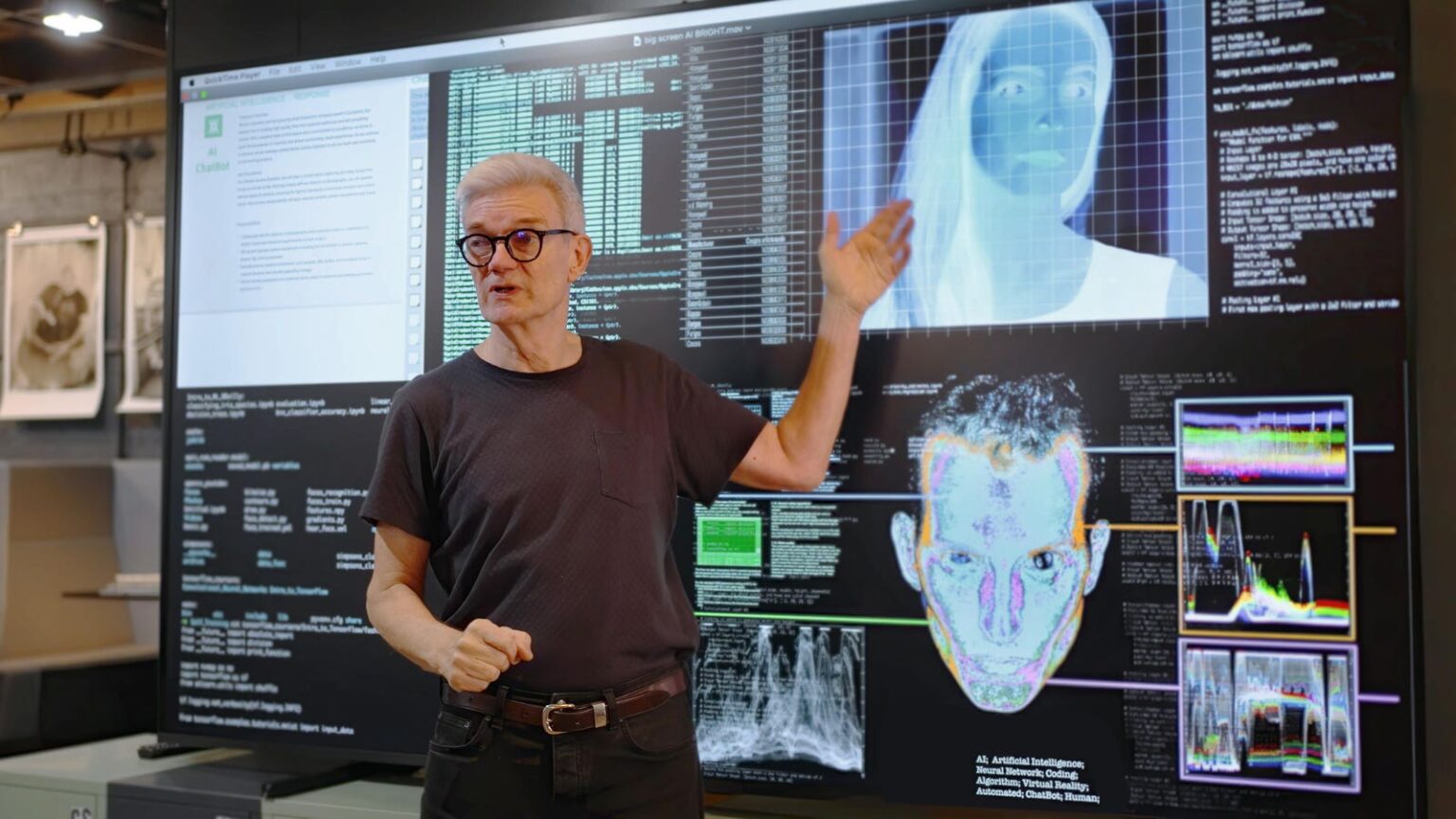

Deepfakes: The New Face Of Digital Deception

A few years ago, deepfakes were a novelty—experimental clips of celebrities or politicians saying things they never actually said. Today, they’re a global security risk.

We’ve already seen real-world consequences. A multinational firm in Hong Kong was tricked out of $25 million through a deepfake video call impersonating a senior executive. The attackers used generative AI to clone the executive’s face and voice with such precision that even seasoned employees didn’t suspect anything was off. This raises the question: Are we enabling innovation that erodes the very fabric of reality?

The Ethics Gap: A Quiet But Critical Risk

Despite the scale of AI development, most companies still lag behind on ethical governance. As of 2021, “only 20% of enterprises had an AI ethics framework in place.” Meanwhile, over half of developers admitted they had worked on a project where they felt unsure about the ethical implications but had no process or person to raise concerns with. This ethics gap is dangerous, not only for public trust but also for long-term business sustainability.

As new AI capabilities roll out faster than laws can keep up, the burden of responsibility falls on their creators. When things go wrong, whether through biased algorithms, data leaks or deepfakes, the consequences follow quickly: reputational damage, fines, lawsuits and customer backlash.

Global Regulation: A Patchwork In Progress

Governments worldwide are beginning to respond, but progress remains uneven. The EU AI Act, finalized in 2024, is “the world’s first comprehensive AI law.” It sorts systems into four risk levels, ranging from minimal to unacceptable, and bans certain uses outright, such as real-time remote biometric identification in publicly accessible spaces for law enforcement, apart from narrow exceptions. The most serious violations carry fines of up to €35 million or 7% of global annual turnover, whichever is higher. The U.S., by contrast, has taken a more fragmented approach, relying on state laws and federal guidance that emphasize transparency over strict regulation.

China’s 2023 rules on deepfakes require clear labeling of synthetic media and real-identity verification of the users who create it, while India is preparing its Digital India Act, expected to include AI guidelines. Yet in the absence of global consensus, companies must navigate a patchwork of standards and often react too late. Forward-thinking organizations, however, are going further, proactively shaping their own internal ethical frameworks.

How Tech Leaders Can Lead With Ethics

Leading with ethics means embedding ethical practices deeply within the organization’s culture, its workflows and its product life cycle. Ethical leadership in tech is not an afterthought—it’s a continuous process that involves ongoing commitment, thoughtful decision-making and the courage to lead by example. Here’s how tech leaders can take actionable steps toward ethical innovation:

Embed ethics in product design.

Ethics shouldn’t be bolted onto a product as a compliance measure after it’s built. Instead, it should be integrated into design and development from the very beginning. This means creating a culture where engineers, product managers and designers think critically about the implications of their work, not just for the business but for society as a whole.

Create internal AI governance structures.

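As AI becomes more integrated into the products and services we offer, it’s crucial to ensure that the models we build are fair, transparent and safe. This goes beyond adhering to laws; it’s about fostering trust with consumers, partners and employees. In practice, governance might take the form of a cross-functional review board that assesses high-risk models before launch, with clear owners and a defined channel for raising ethical concerns. Structures like these help ensure that AI is developed, tested and deployed in a way that minimizes harm and maximizes benefits.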

Train for ethical awareness.

Ethics isn’t just the responsibility of the leadership team—it’s something that should permeate the entire organization. One of the most effective ways to do this is through ongoing training. Ethical awareness can help employees at all levels identify when a product or decision might cause harm, be biased or infringe upon privacy.

Go beyond compliance.

Compliance with laws and regulations, while crucial, is just the baseline. Ethical leadership means going above and beyond what is legally required. Transparency, especially in data collection, AI usage and algorithmic decision-making, is vital to building long-term trust with your users.

Collaborate across the industry.

Tech companies often compete fiercely, but when it comes to ethics, collaboration is essential. Tech leaders must work together to set shared industry standards, establish guidelines and develop a collective approach to common ethical challenges.

Is Ethical Innovation Possible?

Yes—but only for those willing to lead with foresight and integrity.

Innovation that disconnects from ethics may yield short-term gains, but it risks long-term harm—to people, to institutions and to the trust that binds technology to society. The world doesn’t just need smarter algorithms or faster processors—it needs a new kind of leadership.

So, instead of asking yourself what your technology can do, ask yourself what it should do. That answer will define the future.

Forbes Business Council is the foremost growth and networking organization for business owners and leaders.
