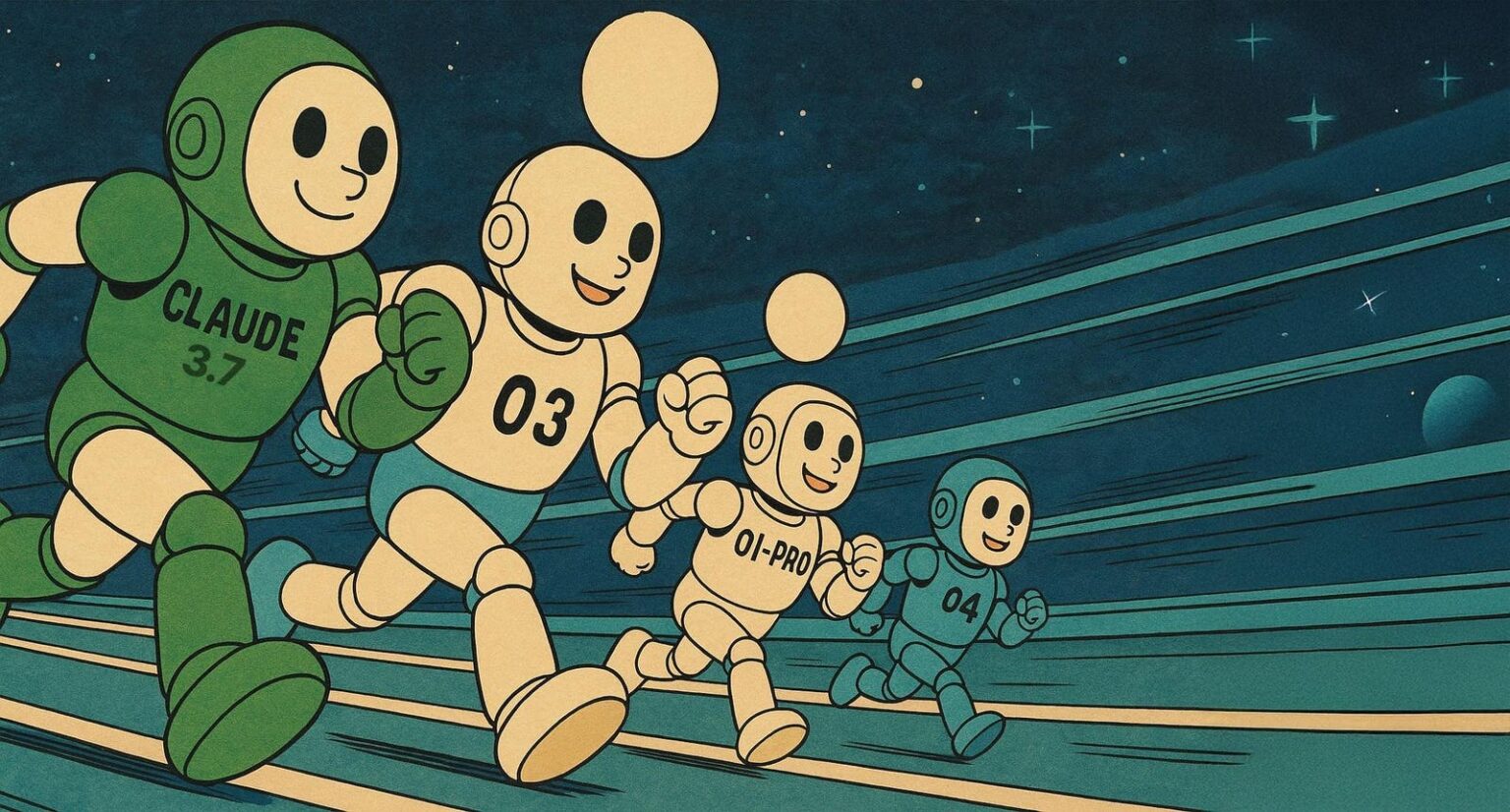

On the heels of the recent GPT-4.1 announcement, OpenAI has unveiled a whole set of new, more powerful models. As someone who relies on AI daily for everything from research questions to finding local sports information, I couldn’t wait to put these models through their paces. Below are my first impressions, along with some practical comparisons.

Before diving in, an important caveat: AI performance is non-deterministic and highly use-case specific. In simpler terms, your mileage may vary. Don’t take this (or any other) article as the final word. Instead, test these models on your own scenarios to determine what works best for you.

For example, I don’t use AI to explain homework assignments. Instead, I ask questions like “Is there scientific merit to drinking alkaline water?” or “Are there lacrosse clubs around San Jose that have produced D1 commitments?” or request explanations of options math. Your needs may differ significantly.

In my recent Forbes article, “The AI Economy Paradox: When $20 No Longer Buys $200 Worth of Intelligence”, I discussed how AI pricing and value propositions are evolving. This comparison builds on that analysis, looking at the practical performance differences across the latest models.

When You Need to Dig Deep:

- OpenAI’s Deep Research mode continues to outshine competitors for comprehensive analysis. I’ll keep this in my toolkit for when depth matters.

- o1-Pro can’t search the web and relies solely on its internal knowledge, but it reasons more powerfully than other models. This makes it invaluable for complex analytical tasks where reasoning trumps recency.

- o3-Pro is slated for release in the coming weeks, and I’m eager to see whether it can combine the best attributes of the two options above.

As a Daily Workhorse:

- Claude 3.7 (the premium version with tools and “thinking” unlocked) may actually provide better practical value than the options above for most users. It’s roughly 10x cheaper and significantly faster, while still acing many everyday tasks. I’ll continue using it for quick requests where its capabilities shine.

- o3 performs admirably as well, offering slightly more detailed responses. This additional detail can be either a benefit (when learning) or a drawback (when seeking concise answers), depending on your needs. I’m continuing to test it as my potential go-to option.

I also experimented with o4-mini-high, but it underperformed in enough of my test cases that I’ll stick with the models mentioned above. Again, your experience might differ based on your specific use cases.

I hope these practical insights help you navigate the ever-evolving AI landscape. If you have your own side-by-side comparisons—whether insightful or amusing—please share them in the comments.
